No. 1054: Gar-Lightfield

First | Previous | 2012-04-07 | Next | Latest

Permanent URL: https://mezzacotta.net/garfield/?comic=1054

Strip by: David Morgan-Mar

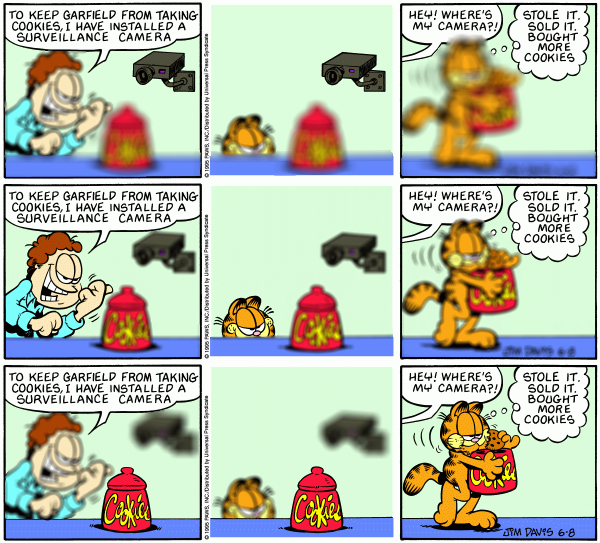

Jon: To keep Garfield from taking cookies, I have installed a surveillance camera.

{Garfield pops up and eyes cookie jar}

Jon: Hey! Where's my camera?!

Garfield: Stole it. Sold it. Bought more cookies.

The author writes:

Admin note: Due to limitations in the site coding, the full interactive experience of this strip is presented here in the comment section. Try clicking on different things.

In late February 2012, start-up company Lytro released its first consumer-level lightfield camera, placing the technology of lightfield photography into the hands of ordinary people.

A normal digital camera records light with a light-sensitive electronic image sensor divided into tiny pixels. The lens of the camera focuses an image of the scene being photographed onto the image sensor. Each pixel on the sensor simply records the total amount of light hitting it during the exposure. Replace the electronic sensor with a chemically photo-sensitive film or plate, and this is the way photography has been done for over a hundred years.

One of the fundamental features of lenses is that they focus objects at a specific distance. There is a limited range of distances around this ideal focus distance which looks acceptably sharp to human eyes, and beyond that range objects start to look blurry. So if you focus a camera on your cat, the trees in the distant background look out of focus, and vice versa. The "acceptably sharp" distance range is referred to as the depth of field, and you can change it, within limits, by adjusting the camera aperture. With a narrow enough aperture, you can get almost everything looking fairly sharp. This is great for landscape photographers, but not so good for portraits or sports photos, where you want the background to look blurry, so as not to distract from the person or the action that is the focus of your shot.
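To put rough numbers on how aperture changes the depth of field, here is a minimal sketch using the standard thin-lens approximations. The function name `depth_of_field`, the 50 mm lens, the 3 m cat, and the 0.03 mm circle of confusion (a common convention for "acceptably sharp" on a full-frame sensor) are all assumptions chosen for illustration, not properties of any particular camera.

```python
import math

def depth_of_field(focal_mm, f_number, subject_mm, coc_mm=0.03):
    """Return (near, far) limits of acceptable sharpness, in millimetres.

    Uses the usual thin-lens approximations. coc_mm is the assumed
    circle of confusion, i.e. how big a blur spot still counts as sharp.
    """
    hyperfocal = focal_mm ** 2 / (f_number * coc_mm) + focal_mm
    near = (subject_mm * (hyperfocal - focal_mm)
            / (hyperfocal + subject_mm - 2 * focal_mm))
    if subject_mm >= hyperfocal:
        far = math.inf  # everything out to infinity is acceptably sharp
    else:
        far = subject_mm * (hyperfocal - focal_mm) / (hyperfocal - subject_mm)
    return near, far

# A cat 3 m away, photographed with a 50 mm lens at two apertures.
wide = depth_of_field(50, 2, 3000)     # f/2: wide aperture
narrow = depth_of_field(50, 16, 3000)  # f/16: narrow aperture

for label, (near, far) in (("f/2", wide), ("f/16", narrow)):
    print(f"{label}: sharp from {near / 1000:.2f} m to {far / 1000:.2f} m")
```

Under these assumptions the f/16 range comes out several times deeper than the f/2 range, which is the landscape-versus-portrait trade-off described above.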

So many photographers typically set a fairly shallow depth of field, and then trust their focusing ability (or the camera's autofocus) to focus sharply on the right object. Sometimes this backfires, and you end up out of focus, or focused on the wrong thing. Wouldn't it be good if you didn't have to worry about focusing at all? If you could just take a photo, and then later decide what object you want to focus on?

Enter the lightfield camera. What's a lightfield, I hear you ask? Excellent question!

The data that comes out of a digital camera sensor is an image. We're all familiar with images. Technically speaking, a digital image is an array of pixels, each pixel containing a measure of the brightness and colour of the image at that point. If you display or print all of the pixels in the right order, and the pixels are small enough, the result looks like a picture, or a scene, or whatever. An image contains representations of objects that may be in focus (and nice and sharp), or out of focus (and blurry). The problem with an image is that once the light from an object becomes blurred, information is lost and you can't unblur it again. (For the tech-heads, there is a mathematical method called deconvolution which can attempt to deblur a blurry image, but in general it's not enough to unambiguously recover the lost information. "Enhance" button on CSI-style TV shows notwithstanding.)
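Here is a toy illustration of why blurring loses information. It is only a sketch: the "image" is a one-dimensional row of pixels, and the hypothetical `blur` function averages each pixel with its neighbour, which is far simpler than real defocus blur. The point is that two different scenes can blur to exactly the same result, so nothing can tell them apart afterwards.

```python
def blur(signal):
    """Average each pixel with its right-hand neighbour (wrapping around)."""
    n = len(signal)
    return [(signal[i] + signal[(i + 1) % n]) / 2 for i in range(n)]

# Two different striped "scenes": one is the other shifted by a pixel.
stripes_a = [1, 0, 1, 0, 1, 0]
stripes_b = [0, 1, 0, 1, 0, 1]

blurred_a = blur(stripes_a)
blurred_b = blur(stripes_b)

print(blurred_a)               # uniform grey
print(blurred_b)               # the same uniform grey
print(blurred_a == blurred_b)  # True: the difference is gone
```

Given only the uniform grey row, no amount of processing can say which set of stripes was in front of the camera.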

To refocus a normal camera, you twiddle with the lens. What this does is to move the lens further away from or closer to the image sensor. It's the distance between the lens and the sensor that governs what range of objects in front of the camera is in focus. This is because the light coming through the lens from objects at different distances focuses behind the lens at different distances. The light from your cat focuses at one distance behind the lens, while the light from the trees in the distance focuses at a different distance behind the lens. So to focus on your cat, you move the lens so that the sensor is at the spot where the light from your cat is in focus. At this spot, the light from the trees is out of focus. If you wanted to focus on the trees, you'd move the lens so that the sensor is at the spot where the light from the trees is in focus, and at this spot the light from your cat is out of focus.
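The relationship described above is the thin-lens equation, 1/f = 1/d_object + 1/d_image. As a rough sketch, assuming an idealised thin lens with a 50 mm focal length, a cat 2 m away and trees 50 m away (all numbers picked purely for illustration), you can work out where each one comes into focus behind the lens:

```python
def image_distance(focal_mm, object_mm):
    """Distance behind the lens where an object at object_mm comes into focus.

    Solves the thin-lens equation 1/f = 1/d_object + 1/d_image for d_image.
    Only valid for objects farther away than the focal length.
    """
    if object_mm <= focal_mm:
        raise ValueError("object must be farther away than the focal length")
    return 1.0 / (1.0 / focal_mm - 1.0 / object_mm)

FOCAL_MM = 50.0
cat_mm = image_distance(FOCAL_MM, 2_000.0)     # cat 2 m away
trees_mm = image_distance(FOCAL_MM, 50_000.0)  # trees 50 m away

print(f"cat focuses   {cat_mm:.2f} mm behind the lens")
print(f"trees focus   {trees_mm:.2f} mm behind the lens")
print(f"lens travel   {cat_mm - trees_mm:.2f} mm")
```

The two focus positions differ by only a little over a millimetre here, which is why focusing takes such small, precise movements of the lens.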

What if you could capture not just the total of all the light at each pixel in your photo, but also split it up and record the direction that each bit of light is coming from? Then if you accidentally focused on the trees and your cat was all blurry, the blurry pixels of your cat would contain the intensity and the directions of the incoming light from your cat. Using some geometry, you could plot the path of all the light rays back through the camera, and figure out where in the camera the sensor should have been to take a nice sharp photo of your cat. And, since you know the intensity and direction of all the light rays, you can calculate what that photo would have looked like if the camera sensor had been there, instead of where it actually was.
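The ray-tracing idea can be sketched in a few lines. This is a deliberately simplified "flatland" toy (one spatial dimension, one direction value per ray), not Lytro's actual processing, and the names `capture` and `refocus` are made up for the example. Each recorded ray is a sensor position `x`, a slope `u` (sideways movement per unit of distance along the optical axis), and an intensity. Sliding the imaginary sensor by `d` moves each ray to `x + d * u`.

```python
from collections import defaultdict

def capture(x0, z_focus, slopes):
    """Record rays from one point of the scene at the real sensor (z = 0).

    All the rays cross at position x0 on the plane z = z_focus, which is
    where a sensor would have seen that point in perfect focus.
    Returns a list of (x, u, intensity) tuples.
    """
    return [(x0 - u * z_focus, u, 1.0) for u in slopes]

def refocus(rays, d, pixel=1.0):
    """Compute the image an imaginary sensor at z = d would have recorded."""
    image = defaultdict(float)
    for x, u, intensity in rays:
        x_new = x + d * u                 # follow the ray to the new plane
        image[round(x_new / pixel)] += intensity
    return dict(image)

# Light from one spot on the cat comes to a focus 20 units in front of the sensor.
rays = capture(x0=10.0, z_focus=-20.0, slopes=[-0.2, -0.1, 0.0, 0.1, 0.2])

blurry = refocus(rays, d=0.0)    # the photo as actually taken
sharp = refocus(rays, d=-20.0)   # the photo with the sensor moved to the focus

print(sorted(blurry.items()))    # light smeared across five pixels
print(sorted(sharp.items()))     # all the light back in one pixel
```

In the photo as taken, the spot is spread over five pixels. After tracing the rays back to the right plane, all five rays land in the same pixel again, which is what refocusing after the fact amounts to in this toy model.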

This is lightfield photography. The record of all of the light intensities and directions at each pixel is the lightfield. It's more than just a 2-dimensional image (image intensity at each pixel), it's a 4-dimensional collection of data (at every single pixel you have a 2-dimensional record of incoming light directions). The cool thing is, over the last 10 years or so it has become possible to manufacture image sensors that will capture this lightfield data. Optics and photography researchers have been playing with this for several years now, but finally it's at the point where it is in a consumer device.
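To make the "4-dimensional" part concrete, here is a small sketch of how such data could be laid out, assuming a hypothetical 2 x 2 pixel sensor with a 3 x 3 grid of directions at each pixel and made-up intensity values. Adding up all the directions at a pixel throws the direction information away and leaves what an ordinary sensor would have recorded.

```python
# Hypothetical tiny lightfield: 2 x 2 pixels, 3 x 3 directions per pixel.
HEIGHT, WIDTH, DIRS = 2, 2, 3

# lightfield[y][x][v][u] is the intensity of the light reaching
# pixel (x, y) from direction (u, v). The values here are invented.
lightfield = [
    [
        [[float(x + y + u + v) for u in range(DIRS)] for v in range(DIRS)]
        for x in range(WIDTH)
    ]
    for y in range(HEIGHT)
]

def conventional_image(lf):
    """Collapse the two direction axes, as an ordinary sensor pixel does."""
    return [[sum(sum(row) for row in pixel) for pixel in line] for line in lf]

image = conventional_image(lightfield)
samples = HEIGHT * WIDTH * DIRS * DIRS

print(image)    # a plain 2-dimensional image
print(samples)  # number of values stored in the lightfield
```

Even this tiny example stores nine times as many numbers as the flat image it collapses to, which hints at why lightfield sensors trade image resolution for direction data.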

And what exactly does a lightfield camera let you do? Well, you can snap a photo of anything you like without focusing. Then afterwards, you can use software to process the lightfield data and produce an image where the focus is anywhere you want it to be. This is a new and unusual way of doing photography, and it will take some time to catch on. The first-generation Lytro camera is not very high resolution, and the resulting photos look okay for the web, but strange when blown up large. And the workflow of fiddling with focus after taking your photos seems bizarre to most keen photographers. But it's exciting and the technology will only get better.

The end result of a Lytro photo can be seen in the examples on their website. You can click on various areas of the photo and see different things come into and out of focus.

So... what if Garfield was captured with a Lytro camera?

Original strip: 1995-06-08.